Audit log collection

With a small configuration change, your PDPs can securely send audit logs to Cerbos Hub. This removes the need to configure and deploy a separate log aggregation solution to securely collect, store, and query audit logs from across your fleet.

For embedded PDPs, decision logs can be captured locally using the SDK’s onDecision callback. See ePDP SDK options for details.

Enabling collection

To get started, you need to obtain a set of client credentials. Navigate to the Settings → Client credentials tab of the deployment, click on Generate a client credential and generate a Read & write credential. Make sure to save the client secret in a safe place as it cannot be recovered.

The client credentials can be provided to the PDP using environment variables or the configuration file. The environment variables to set are:

| Environment variable | Description |
| --- | --- |
| CERBOS_HUB_CLIENT_ID | Client ID |
| CERBOS_HUB_CLIENT_SECRET | Client secret |
| CERBOS_HUB_PDP_ID | Optional. A unique name for the PDP. If not provided, a random value is used. |

Alternatively, you can define these values in the Cerbos configuration file as follows:

    hub:
      credentials:
        pdpID: "..." # Optional. Identifier for this Cerbos instance.
        clientID: "..." # ClientID
        clientSecret: "..." # ClientSecret

To enable audit log collection, configure the hub audit log backend with a local storage path. This local storage path is important for preserving the audit logs until they are safely saved to Cerbos Hub. If there are any network interruptions or if the PDP process crashes, the audit logs generated up to that point are saved on disk and will be sent to Cerbos Hub the next time the PDP starts. If you’re using a container orchestrator or a cloud-based solution to deploy Cerbos, attach a persistent storage volume at this path to ensure that the data does not get lost.

    server:
      httpListenAddr: ":3592" # The port the HTTP server will listen on
      grpcListenAddr: ":3593" # The port the gRPC server will listen on
    hub:
      credentials:
        pdpID: "..." # Optional. Identifier for this Cerbos instance.
        clientID: "..." # ClientID
        clientSecret: "..." # ClientSecret
    audit:
      enabled: true
      backend: hub
      hub:
        storagePath: "..." # Local storage path for buffering the audit logs.

Refer to Cerbos documentation for details about common audit configurations that apply to all backends.

You can configure an optional secondary output for the audit logs. This is useful for cases where you want to aggregate Cerbos audit logs into an existing silo for compliance and monitoring purposes while still taking advantage of the powerful features provided by Cerbos Hub to view and analyze log entries.

The following snippet demonstrates how to configure a secondary output that writes audit entries to the standard output of the container.

    audit:
      enabled: true
      backend: hub
      hub:
        storagePath: "..." # Local storage path for buffering the audit logs.
        pipeOutput: # Secondary output configuration
          enabled: true
          backend: file # Any supported audit backend can be used here.
      file: # Configuration for the file backend which is used by the pipeOutput configuration above.
        path: stdout

To quickly try out the Cerbos Hub audit logs feature, you can use the following command.

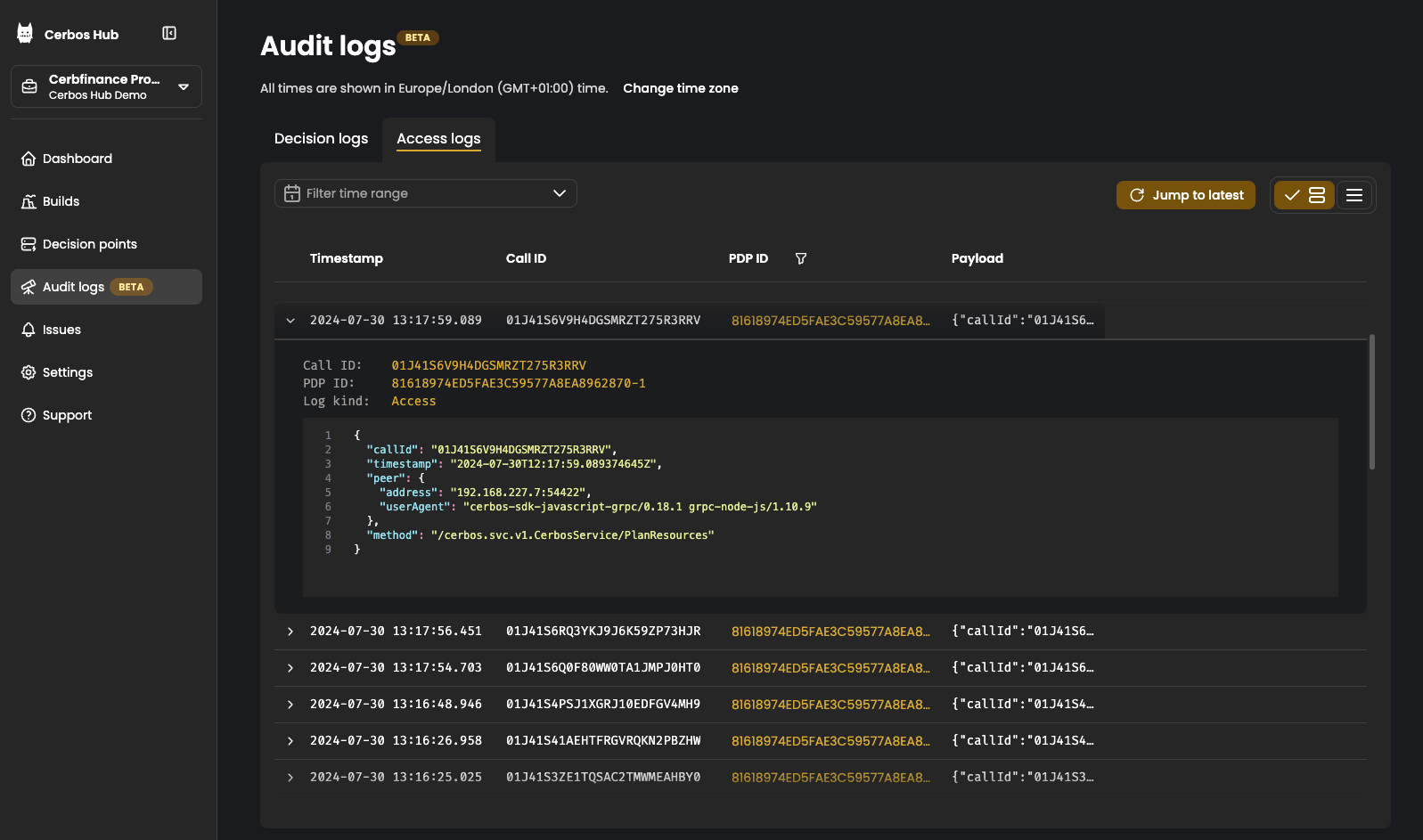
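For container deployments, the pieces described in this section (credentials from environment variables, the hub audit backend, and a persistent volume at the storage path) could be combined roughly as in the Docker Compose sketch below. This is an illustration under stated assumptions, not an official deployment recipe: the service name, the volume name cerbos-audit, the /audit mount path, the image reference, and the use of --set flags to override configuration values are assumptions to check against the Cerbos documentation for your version.

```yaml
# docker-compose.yaml (illustrative sketch; names and paths are assumptions)
services:
  cerbos:
    image: ghcr.io/cerbos/cerbos:latest # Assumed image reference; pin a version in practice.
    command:
      - server
      - --set=audit.enabled=true
      - --set=audit.backend=hub
      - --set=audit.hub.storagePath=/audit # Must match the volume mount below.
    environment:
      # Listed without values so they are passed through from the host
      # environment rather than written into this file.
      - CERBOS_HUB_CLIENT_ID
      - CERBOS_HUB_CLIENT_SECRET
      - CERBOS_HUB_PDP_ID # Optional
    ports:
      - "3592:3592" # HTTP
      - "3593:3593" # gRPC
    volumes:
      - cerbos-audit:/audit # Buffered audit logs survive container restarts.

volumes:
  cerbos-audit: {}
```

Depending on the user the container runs as, you may need to adjust the permissions of the mounted volume so that the PDP can write to it.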

Accessing the logs

Once collection is enabled, the logs can be accessed via the Audit logs tab in Cerbos Hub. From here you can select which logs to view:

Decision logs

The decision logs are records of the authorization decisions made by the Cerbos PDP. These logs provide detailed information about each decision, including the inputs, Cerbos policies evaluated, and the ALLOW/DENY decisions along with any outputs from rule evaluation. Besides providing a comprehensive audit trail, these records can be used for troubleshooting purposes and to understand how Cerbos policies are used within your organization.

The log list displays each entry with the timestamp, call ID, request kind (CheckResources or PlanResources), principal ID, resource kind, resource ID, and action decision. Toggle between the Decision view for a compact summary or JSON view for raw log data.

Log entry details

Click on any log entry to open the details panel, which shows:

- Principal: The user or service that made the request, including their ID, roles, effective derived roles, and any attributes.
- Resource: The resource being accessed, including kind, ID, scope, and attributes.
- Decisions: The authorization outcome for each action, showing the effect (ALLOW or DENY) and which policy produced the decision.
- Outputs: Any output values returned by policy rules.
- Raw JSON: The complete log entry in JSON format.

Deep linking to policies

Policy names in audit log entries are clickable links. Click any policy name in the Decisions section to navigate directly to that policy in your policy store. This makes it easy to investigate authorization decisions and understand the policy logic behind each outcome without manually searching for the relevant policy file.

Access logs

The access logs are records of all the API requests received by the Cerbos PDP. Valid CheckResources and PlanResources API calls have a corresponding decision log entry with the same call ID. API requests that are invalid or unauthenticated are logged as well and can be used to identify misconfigured clients or unauthorized access attempts.

You can filter the logs by a particular PDP and a time range.

Masking sensitive fields

You can define masks to filter out sensitive or personally identifiable information (PII) that might be included in the audit log entries. Masked fields are removed locally at the PDP and are never transmitted to Cerbos Hub.

Masks are defined using a subset of JSONPath syntax.

    hub:
      credentials:
        pdpID: "..." # Optional. Identifier for this Cerbos instance.
        clientID: "..." # ClientID
        clientSecret: "..." # ClientSecret
    audit:
      enabled: true
      backend: hub
      hub:
        storagePath: "..." # Local storage path for buffering the audit logs.
        mask:
          # Fields to mask from CheckResources requests
          checkResources:
            - inputs[*].principal.attr.foo
            - inputs[*].auxData
            - outputs
          # Fields to mask from the metadata
          metadata:
            - authorization
          # Fields to mask from the peer information
          peer:
            - address
            - forwarded_for
          # Fields to mask from the PlanResources requests.
          planResources:
            - input.principal.attr.nestedMap.foo

Use the local audit backend along with cerbosctl audit commands to inspect your audit logs locally and determine the paths that need to be masked.
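As a starting point for that kind of local inspection, a development PDP could be switched to the local backend with a configuration along the lines of the sketch below. The audit.local.storagePath key and the need to enable the Admin API for cerbosctl are assumptions here, not something this page states; verify both against the Cerbos audit configuration and cerbosctl documentation before relying on them.

```yaml
# Sketch for a local, non-production PDP used to discover mask paths.
server:
  adminAPI:
    enabled: true # Assumption: cerbosctl audit reads entries through the Admin API.
audit:
  enabled: true
  backend: local # Keep entries on this machine instead of sending them to Cerbos Hub.
  local:
    storagePath: "..." # Assumed key: local directory for the audit log store.
```

Once you have identified the field paths to hide, switch the backend back to hub and list those paths under the mask configuration.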

To avoid slowing down request processing and to prevent data loss, raw log entries are stored locally on disk at the storage path. The masks are applied later by the background process that syncs the on-disk log entries to Cerbos Hub, so the entries on disk are unmasked. To avoid storing authentication tokens and other sensitive request parameters locally, use the top-level includeMetadataKeys and excludeMetadataKeys settings. Refer to Cerbos audit configuration for more details.
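The metadata key settings mentioned above might be used as in the following sketch. The setting names come from the text; their placement directly under audit and the example header names are assumptions, so confirm them against the Cerbos audit configuration reference.

```yaml
audit:
  enabled: true
  backend: hub
  # Drop these request metadata keys before the entry is written to disk.
  excludeMetadataKeys: ["authorization"]
  # Alternatively, record only an explicit list of keys:
  # includeMetadataKeys: ["content-type"]
  hub:
    storagePath: "..." # Local storage path for buffering the audit logs.
```

Unlike masks, which are applied only when entries are synced to Cerbos Hub, these settings keep the excluded values out of the local on-disk buffer as well.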

Advanced configuration

Advanced users can tune the local log retention period and other buffering settings. We generally do not recommend changing the default values unless absolutely necessary.

    audit:
      enabled: true
      backend: hub
      hub:
        storagePath: "..." # Local storage path for buffering the audit logs.
        retentionPeriod: 168h # How long to keep buffered records on disk.
        advanced:
          bufferSize: 16 # Size of the memory buffer. Increasing this will use more memory and increase the chances of losing data during a crash.
          maxBatchSize: 16 # Write batch size. If your records are small, increasing this will reduce disk IO.
          flushInterval: 30s # Time to keep records in memory before committing.
          gcInterval: 15m # How often the garbage collector runs to remove old entries from the log.